EC2 Backup and Restore

Published 2015-09-14 15:25:57 | Source: compiled from the web

This page describes how to back up, verify, and restore a MongoDB instance running on EC2 using EBS Snapshots.

How you back up MongoDB depends on whether you are using the --journal option, which is available in versions 1.8 and above.

Backup with --journal¶

The journal file allows for roll forward recovery. The journal files are located in the dbpath directory, so they are snapshotted at the same time as the database files.

If the dbpath is mapped to a single EBS volume then proceed to Backup the Database Files.

If the dbpath is mapped to multiple EBS volumes, then to guarantee the stability of the file-system you will need to Flush and Lock the Database.

Note

Snapshotting with the journal is only possible if the journal resides on the same volume as the data files, so that one snapshot operation captures the journal state and data file state atomically.

Backup without --journal¶

To back up MongoDB correctly, you need to ensure that writes to the file-system are suspended before you back up the file-system. If writes are not suspended, the backup may contain partially written data, which may fail to restore correctly.

Depending on your version of MongoDB, use fsync and lock or, after MongoDB 2.0, use db.fsyncLock().

If the file-system is being used only by the database, then you can use the snapshot facility of EBS volumes to create a backup. If you are using the volume for any other application then you will need to ensure that the file-system is frozen as well (e.g. on XFS file-system use xfs_freeze) before you initiate the EBS snapshot.
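The freeze step for a shared XFS volume can be sketched as a small wrapper that freezes the file-system, runs one command, and thaws it again. This is a minimal sketch, not a definitive implementation: the function name with_frozen_fs and the FREEZE_CMD variable are hypothetical, and it assumes xfs_freeze is installed and the script runs as root.

```shell
#!/bin/sh
# Hypothetical helper: keep an XFS file-system frozen only while one command runs.
# FREEZE_CMD is an assumption that lets you substitute another tool or a stub.
FREEZE_CMD=${FREEZE_CMD:-xfs_freeze}

# Usage: with_frozen_fs <mount-point> <command> [args...]
with_frozen_fs() {
    mount_point=$1
    shift
    # Suspend writes to the file-system.
    "$FREEZE_CMD" -f "$mount_point" || return 1
    # Run the snapshot step and remember its exit status.
    "$@"
    freeze_status=$?
    # Thaw the file-system whether or not the snapshot step succeeded.
    "$FREEZE_CMD" -u "$mount_point" || return 1
    return $freeze_status
}
```

For example, with_frozen_fs /var/lib/mongodb ec2-create-snapshot -d backup-20101103 vol-96e8f0ff would freeze the mount point only for the time it takes to initiate the snapshot.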

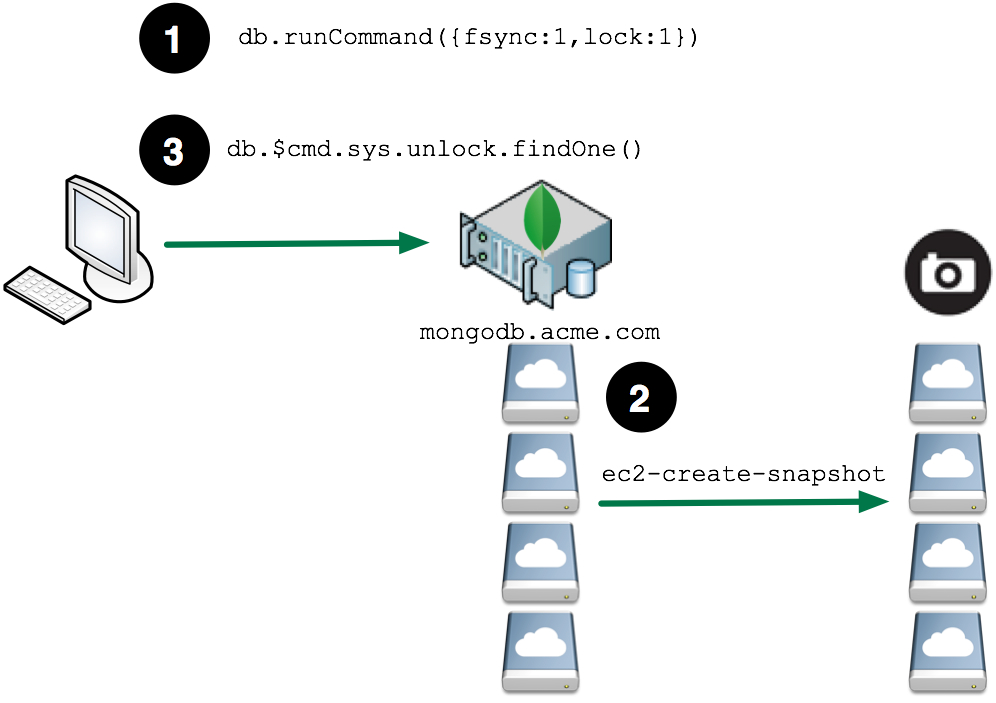

The overall process looks like:

Flush and Lock the Database¶

Writes have to be suspended to the file-system in order to make a stable copy of the database files.

Prior to MongoDB version 2.0, this is achieved through the MongoDB shell using fsync and lock:

mongo shell> use admin

mongo shell> db.runCommand({fsync:1,lock:1});

{

"info" : "now locked against writes, use db.$cmd.sys.unlock.findOne() to unlock",

"ok" : 1

}

MongoDB 2.0 added the db.fsyncLock() method to lock the database and flush writes to disk and added the db.fsyncUnlock() method to unlock the database after the snapshot has completed.

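While the database is locked, any write requests that it receives will not be applied until the lock is released; depending on the driver and its timeout settings, the application may see these requests block or fail. Application code will need to deal with this appropriately.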
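For MongoDB 2.0 and later, the lock, snapshot, unlock sequence can be sketched as a wrapper that always attempts the unlock, even when the snapshot step fails. This is a sketch under stated assumptions: the function name with_fsync_lock and the MONGO_CMD variable are hypothetical, the mongo shell is assumed to be on the PATH, and the host and port are placeholders.

```shell
#!/bin/sh
# Hypothetical helper: hold the fsync lock only while one command runs.
# MONGO_CMD is an assumption that lets you point at a specific shell binary or a stub.
MONGO_CMD=${MONGO_CMD:-mongo}

# Usage: with_fsync_lock <host:port> <command> [args...]
with_fsync_lock() {
    target=$1
    shift
    # Flush pending writes to disk and block new ones.
    "$MONGO_CMD" "$target/admin" --quiet --eval 'printjson(db.fsyncLock())' || return 1
    # Run the snapshot step and remember its exit status.
    "$@"
    lock_status=$?
    # Release the lock whether or not the snapshot step succeeded.
    "$MONGO_CMD" "$target/admin" --quiet --eval 'printjson(db.fsyncUnlock())' || return 1
    return $lock_status
}
```

For example, with_fsync_lock localhost:27000 ec2-create-snapshot -d backup-20101103 vol-96e8f0ff keeps the lock window as short as the snapshot request itself.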

Backup the Database Files¶

There are several ways to create an EBS Snapshot. The following examples use the AWS command line tool.

Find the EBS Volumes Associated with MongoDB¶

If the mapping of EBS block devices to the MongoDB data volumes is already known, then this step can be skipped. The example below shows how to determine the mapping for an LVM volume. If you are unsure how the original system was set up, confirm with your system administrator.

Find the EBS Block Devices Associated with the Running Instance¶

shell> ec2-describe-instances

RESERVATION r-eb09aa81 289727918005 tokyo,default

INSTANCE i-78803e15 ami-4b4ba522 ec2-50-16-30-250.compute-1.amazonaws.com ip-10-204-215-62.ec2.internal running scaleout 0 m1.large 2010-11-04T02:15:34+0000 us-east-1a aki-0b4aa462 monitoring-disabled 50.16.30.250 10.204.215.62 ebs paravirtual

BLOCKDEVICE /dev/sda1 vol-6ce9f105 2010-11-04T02:15:43.000Z

BLOCKDEVICE /dev/sdf vol-96e8f0ff 2010-11-04T02:15:43.000Z

BLOCKDEVICE /dev/sdh vol-90e8f0f9 2010-11-04T02:15:43.000Z

BLOCKDEVICE /dev/sdg vol-68e9f101 2010-11-04T02:15:43.000Z

BLOCKDEVICE /dev/sdi vol-94e8f0fd 2010-11-04T02:15:43.000Z

As can be seen in this example, there are a number of block devices associated with this instance. You must determine which volumes make up the file-system to snapshot.
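To make the listing easier to work with, the BLOCKDEVICE rows can be reduced to device and volume pairs. The following is a minimal sketch; the function name list_block_devices is hypothetical, and it assumes the output layout shown above, where the device is the second field and the volume ID is the third.

```shell
#!/bin/sh
# Hypothetical helper: print "device volume-id" pairs from
# ec2-describe-instances output supplied on standard input.
list_block_devices() {
    awk '$1 == "BLOCKDEVICE" { print $2, $3 }'
}
```

Piping the command output through it, as in ec2-describe-instances | list_block_devices, yields one line per attached volume that later steps can match against device names.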

Determine how the dbpath is Mapped to the File System¶

Log onto the running MongoDB instance in EC2. To determine where the database files are located, look at the startup parameters for the mongod process or, if mongod is running, examine the running process. In the following example, dbpath is set to /var/lib/mongodb/tokyo0.

root> ps -ef | grep mongo

ubuntu 10542 1 0 02:17 ? 00:00:00 /var/opt/mongodb/current/bin/mongod --port 27000 --shardsvr --dbpath /var/lib/mongodb/tokyo0 --fork --logpath /var/opt/mongodb/log/server.log --logappend --rest
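Picking the dbpath out of a long process listing can be scripted. The sketch below uses a hypothetical function name, extract_dbpath, and assumes the option is written as "--dbpath <path>" with a space and that the path contains no whitespace. It will not find a dbpath that is set in a configuration file.

```shell
#!/bin/sh
# Hypothetical helper: print the value that follows --dbpath in each
# mongod command line read from standard input.
extract_dbpath() {
    awk '{ for (i = 1; i < NF; i++) if ($i == "--dbpath") print $(i + 1) }'
}
```

A typical invocation would be ps -ef | grep '[m]ongod' | extract_dbpath, where the bracketed pattern keeps grep from matching its own process.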

Map the dbpath to the Physical Devices¶

Using the df command, determine which device the --dbpath directory is mapped to:

root> df /var/lib/mongodb/tokyo0

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/data_vg-data_vol

104802308 4320 104797988 1% /var/lib/mongodb

Next determine the logical volume associated with this device. In the example above, this is /dev/mapper/data_vg-data_vol.

root> lvdisplay /dev/mapper/data_vg-data_vol

--- Logical volume ---

LV Name /dev/data_vg/data_vol

VG Name data_vg

LV UUID fixOyX-6Aiw-PnBA-i2bp-ovUc-u9uu-TGvjxl

LV Write Access read/write

LV Status available

# open 1

LV Size 100.00 GiB

...

This output indicates the volume group associated with this logical volume, in this example data_vg. Next determine how this maps to the physical volume.

root> pvscan

PV /dev/md0 VG data_vg lvm2 [100.00 GiB / 0 free]

Total: 1 [100.00 GiB] / in use: 1 [100.00 GiB] / in no VG: 0 [0 ]

From the physical volume, determine the associated physical devices, in this example /dev/md0.

root> mdadm --detail /dev/md0

/dev/md0:

Version : 00.90

Creation Time : Thu Nov 4 02:17:11 2010

Raid Level : raid10

Array Size : 104857472 (100.00 GiB 107.37 GB)

Used Dev Size : 52428736 (50.00 GiB 53.69 GB)

Raid Devices : 4

...

UUID : 07552c4d:6c11c875:e5a1de64:a9c2f2fc (local to host ip-10-204-215-62)

Events : 0.19

Number Major Minor RaidDevice State

0 8 80 0 active sync /dev/sdf

1 8 96 1 active sync /dev/sdg

2 8 112 2 active sync /dev/sdh

3 8 128 3 active sync /dev/sdi

The block devices /dev/sdf through /dev/sdi make up this physical device. Each of these volumes must be snapshotted in order to complete the backup of the file-system.
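The last two lookups, from RAID members to EBS volume IDs, can be sketched as two small filters. The function names raid_members and volumes_for_devices are hypothetical, and the map file is an assumption: a text file of "device volume-id" pairs, one per line, such as the BLOCKDEVICE rows of ec2-describe-instances reduced to two columns.

```shell
#!/bin/sh
# Hypothetical helper: print the member devices (last field of each
# "active sync" row) from mdadm --detail output on standard input.
raid_members() {
    awk '/active sync/ { print $NF }'
}

# Hypothetical helper: look up the volume ID for each device.
# Usage: volumes_for_devices <device-map-file> <device>...
volumes_for_devices() {
    map_file=$1
    shift
    for dev in "$@"; do
        awk -v d="$dev" '$1 == d { print $2 }' "$map_file"
    done
}
```

With the map saved to a file, volumes_for_devices devices.map $(mdadm --detail /dev/md0 | raid_members) would print the volume IDs that need a snapshot.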

Create the EBS Snapshot¶

Create a snapshot for each device. Pass the ec2-create-snapshot command the volume ID that ec2-describe-instances lists for that device.

shell> ec2-create-snapshot -d backup-20101103 vol-96e8f0ff

SNAPSHOT snap-417af82b vol-96e8f0ff pending 2010-11-04T05:57:29+0000 289727918005 50 backup-20101103

shell> ec2-create-snapshot -d backup-20101103 vol-90e8f0f9

SNAPSHOT snap-5b7af831 vol-90e8f0f9 pending 2010-11-04T05:57:35+0000 289727918005 50 backup-20101103

shell> ec2-create-snapshot -d backup-20101103 vol-68e9f101

SNAPSHOT snap-577af83d vol-68e9f101 pending 2010-11-04T05:57:42+0000 289727918005 50 backup-20101103

shell> ec2-create-snapshot -d backup-20101103 vol-94e8f0fd

SNAPSHOT snap-2d7af847 vol-94e8f0fd pending 2010-11-04T05:57:49+0000 289727918005 50 backup-20101103
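When several volumes make up the file-system, the four commands above can be issued from a loop so that every snapshot gets the same description. This is a sketch only: the function name snapshot_volumes and the SNAPSHOT_CMD variable are hypothetical, and the loop stops at the first failure rather than retrying.

```shell
#!/bin/sh
# SNAPSHOT_CMD is an assumption that lets you substitute another tool or a stub.
SNAPSHOT_CMD=${SNAPSHOT_CMD:-ec2-create-snapshot}

# Hypothetical helper: snapshot every listed volume with one shared description.
# Usage: snapshot_volumes <description> <volume-id>...
snapshot_volumes() {
    description=$1
    shift
    for volume in "$@"; do
        # Stop at the first failure so a partial backup is noticed.
        "$SNAPSHOT_CMD" -d "$description" "$volume" || return 1
    done
}
```

For example, snapshot_volumes backup-20101103 vol-96e8f0ff vol-90e8f0f9 vol-68e9f101 vol-94e8f0fd reproduces the sequence shown above.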

Unlock the Database¶

After the snapshots have been created, the database can be unlocked. Once it is unlocked, the database is available to process write requests again. On MongoDB 2.0 and later, use db.fsyncUnlock(); the command below is the form used by earlier versions.

mongo shell> db.$cmd.sys.unlock.findOne();

{ "ok" : 1, "info" : "unlock requested" }

Verify the Backup¶

In order to verify the backup, you must do the following:

- Check the status of each snapshot to ensure it is “completed.”

- Create new volumes based on the snapshots and mount the new volumes.

- Run mongod and verify the collections.

Typically, the verification is performed on another machine so that you do not burden your production systems with the additional CPU and I/O load of the verification processing.

Describe the Snapshots¶

Using the ec2-describe-snapshots command, find the snapshots that make up the backup. Using a filter on the description field, snapshots associated with the given backup are easily found. The search text used should match the text used in the -d flag passed to ec2-create-snapshot command when the backup was made.

backup shell> ec2-describe-snapshots --filter "description=backup-20101103"

SNAPSHOT snap-2d7af847 vol-94e8f0fd completed 2010-11-04T05:57:49+0000 100% 289727918005 50 backup-20101103

SNAPSHOT snap-417af82b vol-96e8f0ff completed 2010-11-04T05:57:29+0000 100% 289727918005 50 backup-20101103

SNAPSHOT snap-577af83d vol-68e9f101 completed 2010-11-04T05:57:42+0000 100% 289727918005 50 backup-20101103

SNAPSHOT snap-5b7af831 vol-90e8f0f9 completed 2010-11-04T05:57:35+0000 100% 289727918005 50 backup-20101103
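The status check can be automated by scanning the fourth field of each SNAPSHOT row. The sketch below uses a hypothetical function name, all_snapshots_completed, and assumes the column layout shown above. It treats an empty listing as a failure so that a mistyped filter is not mistaken for success.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if standard input contains at least one
# SNAPSHOT row and every SNAPSHOT row reports the status "completed".
all_snapshots_completed() {
    awk '$1 == "SNAPSHOT" { n++; if ($4 != "completed") bad++ }
         END { exit (n > 0 && bad == 0) ? 0 : 1 }'
}
```

It can be used in a condition such as: if ec2-describe-snapshots --filter "description=backup-20101103" | all_snapshots_completed; then echo "backup ready"; fi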

Create New Volumes Based on the Snapshots¶

Using the ec2-create-volume command, create a new volume from each of the snapshots that make up the backup.

backup shell> ec2-create-volume --availability-zone us-east-1a --snapshot snap-2d7af847

VOLUME vol-06aab26f 50 snap-2d7af847 us-east-1a creating 2010-11-04T06:44:27+0000

backup shell> ec2-create-volume --availability-zone us-east-1a --snapshot snap-417af82b

VOLUME vol-1caab275 50 snap-417af82b us-east-1a creating 2010-11-04T06:44:38+0000

backup shell> ec2-create-volume --availability-zone us-east-1a --snapshot snap-577af83d

VOLUME vol-12aab27b 50 snap-577af83d us-east-1a creating 2010-11-04T06:44:52+0000

backup shell> ec2-create-volume --availability-zone us-east-1a --snapshot snap-5b7af831

VOLUME vol-caaab2a3 50 snap-5b7af831 us-east-1a creating 2010-11-04T06:45:18+0000

Attach the New Volumes to the Instance¶

Using the ec2-attach-volume command, attach each volume to the instance where the backup will be verified.

backup shell> ec2-attach-volume --instance i-cad26ba7 --device /dev/sdp vol-06aab26f

ATTACHMENT vol-06aab26f i-cad26ba7 /dev/sdp attaching 2010-11-04T06:49:32+0000

backup shell> ec2-attach-volume --instance i-cad26ba7 --device /dev/sdq vol-1caab275

ATTACHMENT vol-1caab275 i-cad26ba7 /dev/sdq attaching 2010-11-04T06:49:58+0000

backup shell> ec2-attach-volume --instance i-cad26ba7 --device /dev/sdr vol-12aab27b

ATTACHMENT vol-12aab27b i-cad26ba7 /dev/sdr attaching 2010-11-04T06:50:13+0000

backup shell> ec2-attach-volume --instance i-cad26ba7 --device /dev/sds vol-caaab2a3

ATTACHMENT vol-caaab2a3 i-cad26ba7 /dev/sds attaching 2010-11-04T06:50:25+0000
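Attaching several volumes can also be written as a loop over device and volume pairs. This is a minimal sketch: the function name attach_volumes, the ATTACH_CMD variable, and the "device:volume-id" argument format are all hypothetical conveniences, and the flags are the ones used in the commands above.

```shell
#!/bin/sh
# ATTACH_CMD is an assumption that lets you substitute another tool or a stub.
ATTACH_CMD=${ATTACH_CMD:-ec2-attach-volume}

# Hypothetical helper: attach each volume to the instance at the given device.
# Usage: attach_volumes <instance-id> <device:volume-id>...
attach_volumes() {
    instance=$1
    shift
    for pair in "$@"; do
        device=${pair%%:*}   # text before the first colon
        volume=${pair#*:}    # text after the first colon
        "$ATTACH_CMD" --instance "$instance" --device "$device" "$volume" || return 1
    done
}
```

For example, attach_volumes i-cad26ba7 /dev/sdp:vol-06aab26f /dev/sdq:vol-1caab275 /dev/sdr:vol-12aab27b /dev/sds:vol-caaab2a3 issues the same four requests as above.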

Mount the Volumes¶

Make the file-system visible on the host O/S. This will vary by the Logical Volume Manager, file-system etc. that you are using. The example below shows how to perform this for LVM. If you are unsure how the original system was set up, confirm with your system administrator.

Assemble the device from the physical devices. The UUID for the device will be the same as the original UUID that the backup was made from, and can be obtained using the mdadm command.

backup shell> mdadm --assemble --auto-update-homehost -u 07552c4d:6c11c875:e5a1de64:a9c2f2fc --no-degraded /dev/md0

mdadm: /dev/md0 has been started with 4 drives.

You can confirm that the physical volumes and volume groups appear correctly to the O/S by executing the following:

backup shell> pvscan

PV /dev/md0 VG data_vg lvm2 [100.00 GiB / 0 free]

Total: 1 [100.00 GiB] / in use: 1 [100.00 GiB] / in no VG: 0 [0 ]

backup shell> vgscan

Reading all physical volumes. This may take a while...

Found volume group "data_vg" using metadata type lvm2

Create the mount point and mount the file-system:

backup shell> mkdir -p /var/lib/mongodb

backup shell> cat >> /etc/fstab << EOF

/dev/mapper/data_vg-data_vol /var/lib/mongodb xfs noatime,noexec,nodiratime 0 0

EOF

backup shell> mount /var/lib/mongodb
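Because the commands above append to /etc/fstab, running them twice on the same verification host leaves a duplicate entry. One way to guard against that is sketched below; the function name ensure_fstab_entry is hypothetical, and it compares whole lines exactly, so an entry that differs only in spacing is treated as new.

```shell
#!/bin/sh
# Hypothetical helper: append an entry to an fstab-style file only if an
# identical line is not already present.
# Usage: ensure_fstab_entry <fstab-file> <entry-line>
ensure_fstab_entry() {
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}
```

For example, ensure_fstab_entry /etc/fstab '/dev/mapper/data_vg-data_vol /var/lib/mongodb xfs noatime,noexec,nodiratime 0 0' can be run repeatedly without growing the file.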

Start the Database¶

After the file-system has been mounted, MongoDB can be started. Ensure that the owner of the files is set to the correct user and group. Since the backup was made with the database running, the lock file will need to be removed in order to start the database.

backup shell> chown -R mongodb /var/lib/mongodb/tokyo0

backup shell> rm /var/lib/mongodb/tokyo0/mongod.lock

backup shell> mongod --dbpath /var/lib/mongodb/tokyo0

Restore¶

Restore uses the same basic steps as the verification process:

- Mount the file system.

- Run mongod.

After the file-system is mounted you can decide to:

- Copy the database files from the backup into the current database directory

- Start mongod from the new mount point, specifying the new mount point in the --dbpath argument.

After the database is started, it will be ready to transact. Its data reflects the point in time at which the backup was taken, so if the instance is part of a master/slave or replica set relationship, it will need to synchronize with the other members to bring itself up to date.